Human-Centered AI Transparency

Aug 2022 - Jul 2024

Research Question

How do users experience, perceive, or relate to the workings and effects of AI systems that mediate their everyday lives? In this work, I explore this broader question in the context of civic AI systems, which are dominant yet highly invisible. Specifically, I select predictive policing as a case study. Predictive policing systems have existed for over a decade, making them an ideal case for answering this question.

Methods

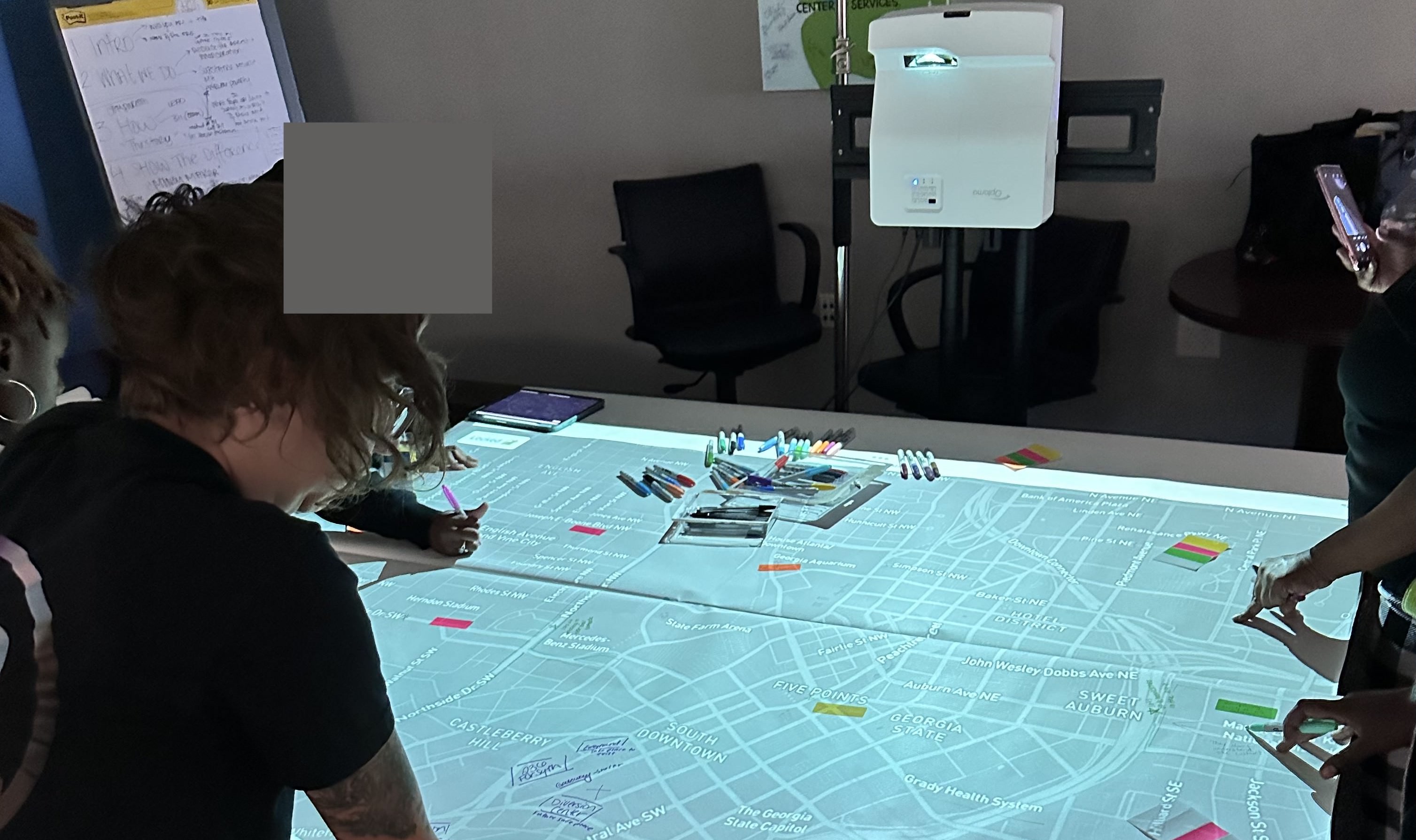

To answer this question, I employed a ‘research through design’ methodology and conducted eight participatory mapping workshops with diverse publics, including police reform groups, city planners, neighborhood and community leaders, a civic development agency, educators, and students.

The workshop call can be found here. The workshops were conducted in person in Atlanta, Georgia, in the southeastern United States. Each was scheduled to last 90 minutes. Before each workshop, a short survey was shared with the participants. The survey served two primary purposes: (1) it confirmed participation through an informal registration process, and (2) it provided workshop moderators with background information that helped them tailor each workshop to its group.

In the workshops, participants were asked to make spatial predictions as an AI system would. They identified places on the map that they were familiar with and believed a predictive tool might identify as a crime hotspot. They were then asked to reflect on their predictions by considering their own reasoning and the effects of their predictions. The workshops followed a loose protocol that guided such reflection. Participants contemplated an AI system’s prediction goal, data type, data source and amount, spatial aggregation of data, and prediction effects in relation to their own speculations. Throughout, moderators prompted participants to consider the socio-political qualities of the places they identified in relation to these technical aspects of the AI system. A focus on specific locations revealed spatial, social, political, and historical characteristics relevant to the technical workings of the predictive tool.

The protocol was documented as a toolkit and shared as a take-away artifact with the workshop participants to support their questioning of AI systems beyond the workshops. Note that the structure of the toolkit was not rigid: it acted as a guide to the conversation while allowing the group to deviate into explaining and questioning the components of AI systems that were most relevant to them.

Findings

Users' explanation needs

Effective user explanations consist of processes that (1) are situated in the lives of diverse publics, (2) explain the complex and entangled socio-technical systems that predictive tools interact with, (3) are ongoing and partial, and (4) empower publics to act in ways that promote democratic deployment and regulation of predictive tools.

Users' relation to AI

Users relate to algorithmic contexts through (1) the predictive domain: the service domain that an AI model becomes a part of (in our case, policing), (2) the prediction subject: the people or places that are subject to predictions (in our case, neighborhoods), (3) the predictive backdrop: the local and global environment that surrounds predictive systems, and (4) the predictive tool: the tools and models that make predictions.

Users' ability to identify AI's social impacts

Locals directly engage with components of AI systems outside of the ‘black box’ and can make known, amongst other aspects, the environments AI tools are deployed in, the cultures and norms they invade, and the lived experiences of the problems they attempt to address. As such, local publics possess partial explanations of AI systems that can come together to raise meaningful and grounded questions about the effects of AI systems.

Impacts

In this project, I worked with diverse community groups to study the workings and impacts of civic AI systems as complex socio-technical assemblages. Such collective investigation helped overcome the epistemic challenges presented by opaque AI technologies, resulting in contextual, grounded, and partial understandings of AI, its impacts, and desired futures.

Additionally, the work helped promote AI transparency and literacy among non-tech stakeholders in accessible and interactive ways. It was applauded by several local non-profits, which showed interest in adapting my methods as long-term community engagement and education strategies.

Ultimately, this research serves as a guide for AI researchers, civic organizations, and policymakers as they work together to develop safe and equitable AI systems.

A few groups documented their workshop experiences in the form of blogs and LinkedIn posts. Click here and here for examples.

RELATED PUBLICATIONS

1. Good Enough Explanations: How Can Local Publics Understand and Explain Civic Predictive Systems? Shubhangi Gupta. PhD Dissertation. Georgia Institute of Technology.

2. Making Smart Cities Explainable: What XAI Can Learn from the “Ghost Map”. Shubhangi Gupta, Yanni Loukissas. Late Breaking Works paper at CHI 2023.

3. Mapping the Smart City: Participatory approaches to XAI. Shubhangi Gupta. Doctoral Consortium at Designing Interactive Systems (DIS) 2023.